Introduction

It’s been a while since I last wrote, and I figured now is as good a time as any to write about my current set of tools and methodologies around AI. A lot has changed in the nearly 8 months since my last post, and most of my free time since then has been spent on AI. Much of this article could be expanded into separate, more detailed articles, but the purpose of this one is to describe my current stack and hint at the direction I’ve been heading.

High Level Architectural Overview

At a high level, I dedicated a machine to AI, and only AI. Partly this is to compartmentalize my environment, but it’s also because so much of what I’m doing involves AI. It’s a headless Debian server with the following specs:

- AMD Threadripper 2970WX 24-core

- 1Gb RAM

- ~8TB of disk space spread across an LVM (multiple disks)

- 2x (soon to be 3x) NVIDIA A6000 Ada cards (~48GB of VRAM per card).

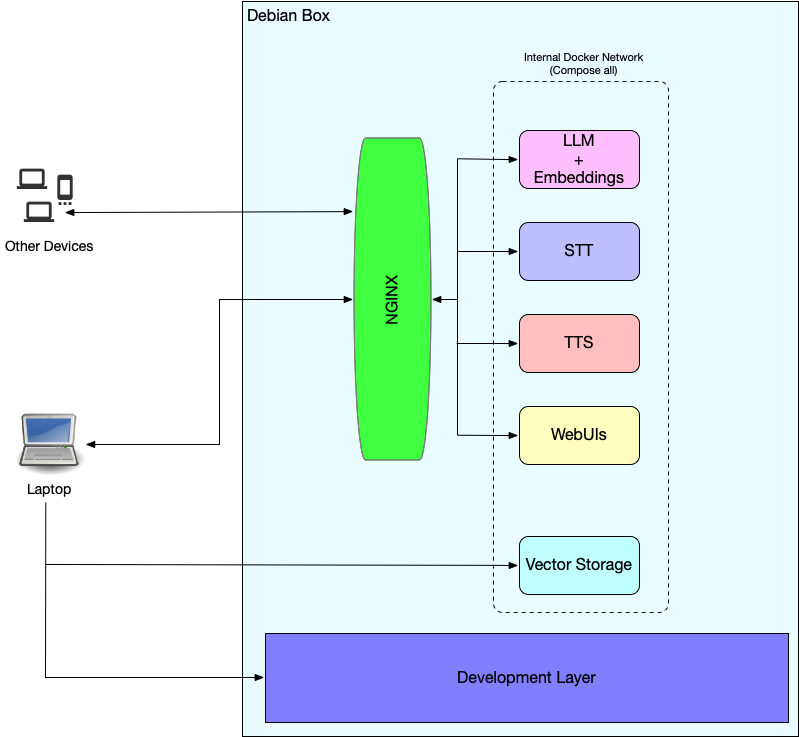

The following architectural diagram explains where I’ve been going with all this:

I make heavy use of Docker to make all this work, and all of the internal Docker components sit on their own network. Many of the services are also exposed directly through nginx, except the vector storage, for reasons covered in the Vector Storage section. The internal Docker network lets the components talk to each other directly, with fewer network hops, which matters because there’s quite a lot of integration between them. The advantage of the nginx layer is that I get my own internal subdomain, and each component sits behind SSL for external communication. SSL is useful for certain components on its own, and it also improves security.
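To make the two access paths concrete, here is a minimal Python sketch of how a client could pick a base URL depending on whether it runs inside the internal Docker network (where Docker's embedded DNS resolves containers on a user-defined network by service name) or comes in from outside through nginx. The service names, ports, and the `ai.example.internal` subdomain are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Service:
    container: str            # hypothetical Docker service name on the internal network
    port: int                 # port the container listens on internally
    subdomain: Optional[str]  # hypothetical nginx subdomain; None means not exposed via nginx


# Hypothetical service registry; names, ports, and domain are placeholders.
DOMAIN = "ai.example.internal"
SERVICES = {
    "localai": Service("localai", 8080, "localai"),
    "whisper": Service("faster-whisper-server", 8000, "whisper"),
    "weaviate": Service("weaviate", 8080, None),  # the vector store skips nginx
}


def base_url(name: str, inside_docker: bool) -> str:
    """Return the URL a client should use to reach a service."""
    svc = SERVICES[name]
    if inside_docker:
        # Containers on the same user-defined network resolve each other by name.
        return f"http://{svc.container}:{svc.port}"
    if svc.subdomain is None:
        raise ValueError(f"{name} is not exposed through nginx")
    # External clients go through nginx, which terminates SSL/TLS.
    return f"https://{svc.subdomain}.{DOMAIN}"
```

Asking for `weaviate` from outside the Docker network raises an error, mirroring the fact that the vector store is not published through nginx.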

The remainder of this post discusses each component, and what I’m using and why.

LLM + Embedding

The workhorse of my stack is the LLM and embedding layer. I run multiple models, including my own fine-tuned ones. For this layer I chose LocalAI [1]. LocalAI is quite easy to set up, supports any GGUF model, and has a lot of built-in features. The primary features I use are:

- GPT: This should be obvious; it’s the LLM portion. I used to run a wide array of models (30 or so), but I’ve recently cut that down drastically based on domain.

- Embeddings: Embeddings are what vector databases store and search over. There are quite a few embedding models, and which one I run is a bit outside the scope of this post, but the MTEB leaderboard [2] is a good place to start.
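Because LocalAI speaks the OpenAI API, both uses above come down to two HTTP endpoints. The sketch below builds those requests with only the Python standard library; the `http://localai:8080/v1` address is an assumption about a typical Docker setup, and the model names you pass in stand for whatever LocalAI has loaded.

```python
import json
import urllib.request

# Hypothetical internal address of the LocalAI container; adjust to your setup.
LOCALAI_URL = "http://localai:8080/v1"


def _post(path: str, payload: dict, base_url: str = LOCALAI_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST against an OpenAI-compatible API."""
    return urllib.request.Request(
        f"{base_url}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat_request(model: str, prompt: str) -> urllib.request.Request:
    # Same body shape as OpenAI's /v1/chat/completions.
    return _post("/chat/completions", {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    })


def embedding_request(model: str, texts: list[str]) -> urllib.request.Request:
    # Same body shape as OpenAI's /v1/embeddings; one vector comes back per input string.
    return _post("/embeddings", {"model": model, "input": texts})


def send(req: urllib.request.Request) -> dict:
    """Send a request and decode the JSON response (requires a running server)."""
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())
```

With a server running, `send(chat_request("my-model", "Hello"))["choices"][0]["message"]["content"]` returns the reply and `send(embedding_request("my-embedder", ["some text"]))["data"][0]["embedding"]` returns a vector; `my-model` and `my-embedder` are hypothetical model names.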

STT

I use Speech to Text (STT) very heavily. I get transcriptions for most of my meetings, using a pipeline I developed. While LocalAI is perfectly capable of doing STT itself using Whisper, it has some issues, and better options are available. I settled on a project called Faster Whisper [3]. Not only does it use less RAM, it’s also blazing fast: an hour-long recording can be processed in less than a minute. It runs at quite quick speeds on the CPU, too. The faster-whisper-server [4] project serves the model through an OpenAI-compatible API.
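For the transcription step itself, faster-whisper's Python API is small: load a `WhisperModel`, call `transcribe()`, and iterate over the segments it yields. The sketch below wraps that and renders a timestamped transcript. The `large-v3` model size, CUDA device, and `float16` compute type are assumptions, and the formatting helpers are hypothetical, not part of the library.

```python
from typing import Iterable, Protocol


class SegmentLike(Protocol):
    # faster-whisper's segments expose start/end (in seconds) and text.
    start: float
    end: float
    text: str


def fmt_ts(seconds: float) -> str:
    """Format seconds as HH:MM:SS (fractions are truncated)."""
    total = int(seconds)
    h, rem = divmod(total, 3600)
    m, s = divmod(rem, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def to_transcript(segments: Iterable[SegmentLike]) -> str:
    """Render segments as one timestamped line each."""
    return "\n".join(
        f"[{fmt_ts(seg.start)} - {fmt_ts(seg.end)}] {seg.text.strip()}" for seg in segments
    )


def transcribe(path: str, model_size: str = "large-v3") -> str:
    """Transcribe an audio file with faster-whisper (needs the package and a GPU here)."""
    # Imported lazily so the helpers above work without faster-whisper installed.
    from faster_whisper import WhisperModel

    model = WhisperModel(model_size, device="cuda", compute_type="float16")
    segments, _info = model.transcribe(path, beam_size=5)  # segments is a lazy generator
    return to_transcript(segments)
```

On a CPU-only box, `WhisperModel(model_size, device="cpu", compute_type="int8")` is the usual substitution. Because `segments` is a generator, the actual decoding happens while `to_transcript` consumes it.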

TTS

Text to Speech (TTS) is a newer addition to my stack, and not a heavily used one. That said, it’s fun to play with and adds a dynamic element to my WebUIs. LocalAI can also do TTS, but its backends are limiting. I spent multiple days training my own voice model and didn’t like the results from the projects LocalAI supported, so I went with OpenedAI Speech [5]. I had somewhat better luck training an XTTS model than I did with Bark, RVC, or Tortoise. I view this as temporary and want to revisit it long term, since even the XTTS model could use some improvements. OpenedAI Speech exposes OpenAI-compatible endpoints.
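Since OpenedAI Speech mirrors OpenAI's `/v1/audio/speech` endpoint, generating audio is a single JSON POST. The sketch below assumes a hypothetical `http://openedai-speech:8000` address, and that `tts-1-hd` maps to the XTTS backend and `tts-1` to Piper, as the project's README describes; verify that against your version. The voice is OpenAI's stock `alloy` name, so substitute whichever voice you have configured.

```python
import json
import urllib.request

# Hypothetical internal address of the OpenedAI Speech container.
TTS_URL = "http://openedai-speech:8000/v1/audio/speech"


def speech_request(text: str, voice: str = "alloy", model: str = "tts-1-hd",
                   response_format: str = "mp3", speed: float = 1.0) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style text-to-speech request."""
    if not 0.25 <= speed <= 4.0:
        # Range taken from OpenAI's API; the server may enforce its own limits.
        raise ValueError("speed must be between 0.25 and 4.0")
    body = {"model": model, "input": text, "voice": voice,
            "response_format": response_format, "speed": speed}
    return urllib.request.Request(TTS_URL, data=json.dumps(body).encode("utf-8"),
                                  headers={"Content-Type": "application/json"},
                                  method="POST")


def synthesize(text: str, out_path: str, **kwargs) -> None:
    """Send the request and write the returned audio bytes to disk (needs a running server)."""
    with urllib.request.urlopen(speech_request(text, **kwargs), timeout=300) as resp:
        with open(out_path, "wb") as f:
            f.write(resp.read())
```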

WebUIs

I run two different WebUIs locally, and one of them has multiple components. I run two because each serves a different purpose.

SillyTavern

SillyTavern [6] is a generic LLM front end. It supports connections to a number of backends, in a number of different ways. You can use SillyTavern without hosting an LLM of your own, as it can connect to various online providers. What makes SillyTavern nice is that you can use it both for role play and as a generic LLM front end. Role-playing with an LLM is actually a fantastic way to learn effective prompting and use of AI.

Open WebUI and Pipelines

Open WebUI [7] is a project I came across recently. Previously, I was developing my own front ends, first with Streamlit and then with Flask and React, but when I came across this project, I fell in love with it almost immediately. It’s a very generic interface. Out of the box, it supports a feature set very similar to SillyTavern’s, but it better fits my needs from an extensibility standpoint. One reason I was developing my own front end is that I couldn’t find anything that made it easy to add concepts such as RAG, workflows, and the like. Open WebUI meets that need very well.

The base Open WebUI package includes, among other things:

- Tools: These are “extensions” that the LLM of choice can run. For example, a tool can perform a web search as part of your interaction with the LLM.

- Functions: These change the input to and output from an LLM. For example, you can have an LLM’s output translated with Google Translate, have code run automatically, have charts created, and so on.
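To give a feel for how small these extensions are, here is a sketch of a tool and a filter function in the class shapes Open WebUI uses: a `Tools` class whose documented methods the model can call, and a `Filter` class whose `inlet()` and `outlet()` methods rewrite the request and response bodies. The interface has shifted between Open WebUI releases, and real extensions usually add a pydantic `Valves` class for settings, which is omitted here so the sketch runs on the standard library alone. The system prompt and whitespace trimming are hypothetical behaviors.

```python
from datetime import datetime, timezone
from typing import Optional


class Tools:
    """A tool file: each documented method becomes something the LLM can call."""

    def __init__(self):
        pass

    def get_utc_time(self) -> str:
        """
        Get the current date and time in UTC.
        :return: The current UTC time as an ISO 8601 string.
        """
        return datetime.now(timezone.utc).isoformat(timespec="seconds")


class Filter:
    """A filter function: inlet edits the request, outlet edits the response."""

    def __init__(self):
        # Hypothetical system prompt injected into every conversation.
        self.system_prompt = "Answer concisely."

    def inlet(self, body: dict, __user__: Optional[dict] = None) -> dict:
        messages = body.get("messages", [])
        # Add a system message only if the conversation does not start with one.
        if not messages or messages[0].get("role") != "system":
            messages.insert(0, {"role": "system", "content": self.system_prompt})
        body["messages"] = messages
        return body

    def outlet(self, body: dict, __user__: Optional[dict] = None) -> dict:
        # Example post-processing: trim trailing whitespace from assistant replies.
        for message in body.get("messages", []):
            if message.get("role") == "assistant":
                message["content"] = message["content"].rstrip()
        return body
```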

The nice thing about Open WebUI in this regard is that there’s a good number of community contributions [8]. It also has deeper integration with Ollama, which can be good for those who don’t want to run LocalAI or who want to run models on their laptop.

What makes Open WebUI even better, in my opinion, is Pipelines [9]. What makes Pipelines better than the base Open WebUI extensions is really separation of concerns. Each pipeline shows up as a “model” you can select, and everything you do from there runs through pipeline code that is easy to build. This is really powerful for RAG, or whenever you have a complex operation (e.g. multiple AI calls, file system work, or other processing). It’s also designed to install whatever packages a pipeline requires, which is great.
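A pipeline is a Python file that defines a `Pipeline` class with a `pipe()` method; the header docstring is where the Pipelines server reads metadata, including an optional `requirements:` line listing packages to install. The sketch below follows that shape as the Pipelines examples present it at the time of writing, but the retrieval step is a hypothetical keyword match over an in-memory dict, standing in for a real vector-store query and LLM call.

```python
"""
title: Toy RAG Pipeline
description: Hypothetical example that stuffs retrieved context into the prompt.
version: 0.1
"""
from typing import Generator, Iterator, List, Union


class Pipeline:
    def __init__(self):
        # The name is what shows up in Open WebUI's model selector.
        self.name = "Toy RAG Pipeline"
        # Hypothetical in-memory "knowledge base"; a real pipeline would query a vector store.
        self.documents = {
            "nginx": "nginx terminates SSL for services on the internal subdomain.",
            "weaviate": "Weaviate is reached directly because it also uses gRPC.",
        }

    async def on_startup(self):
        # Called when the Pipelines server starts (e.g. open client connections here).
        pass

    async def on_shutdown(self):
        pass

    def retrieve(self, query: str) -> List[str]:
        # Naive keyword match standing in for a vector search.
        q = query.lower()
        return [text for key, text in self.documents.items() if key in q]

    def pipe(self, user_message: str, model_id: str, messages: List[dict],
             body: dict) -> Union[str, Generator, Iterator]:
        context = self.retrieve(user_message)
        if not context:
            return "No relevant context found."
        # A real pipeline would send this prompt to an LLM and return or stream its reply.
        return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {user_message}"
```

Because `pipe()` is an ordinary method, you can exercise it directly in a Python session before dropping the file into a Pipelines server, which makes debugging easier.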

Another nice thing about Pipelines is that it runs entirely independently of Open WebUI. It exposes OpenAI-compatible endpoints, so if you’re using the OpenAI Python module, interacting with a pipeline is just like interacting with any other model. Debugging is a bit more of a challenge, but you can spin up a local Pipelines environment and work on the pipeline there before deploying it to your stack.
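From a client's point of view, a pipeline is just another entry in the model list. This sketch builds the two relevant requests against a Pipelines server using the standard library. `http://localhost:9099` and the `0p3n-w3bu!` key are the defaults documented in the Pipelines README (change the key for anything beyond local testing), `toy_rag_pipeline` is a hypothetical pipeline id, and paths are relative to the server root, which is how Open WebUI itself is pointed at Pipelines.

```python
import json
import urllib.request
from typing import Optional

# Pipelines' documented defaults; override both outside local testing.
PIPELINES_URL = "http://localhost:9099"
PIPELINES_KEY = "0p3n-w3bu!"


def _request(path: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) an authenticated request; POST if there is a body."""
    headers = {"Authorization": f"Bearer {PIPELINES_KEY}"}
    data = None
    if payload is not None:
        headers["Content-Type"] = "application/json"
        data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(f"{PIPELINES_URL}{path}", data=data, headers=headers,
                                  method="POST" if data is not None else "GET")


def list_models_request() -> urllib.request.Request:
    # Each loaded pipeline is listed here as if it were a model.
    return _request("/models")


def ask_pipeline_request(pipeline_id: str, question: str) -> urllib.request.Request:
    # The pipeline id goes in the usual "model" field.
    return _request("/chat/completions", {
        "model": pipeline_id,
        "messages": [{"role": "user", "content": question}],
        "stream": False,
    })
```

With the OpenAI Python module, the equivalent is setting `base_url` to the Pipelines server and passing the pipeline id as `model`.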

Vector Storage

Vector storage is an incredibly important part of your stack if you’re dealing with documents. One of the best solutions I’ve found, if you’re doing anything at “scale”, is Weaviate [10]. Weaviate deserves a post of its own; it’s that big of a project. Weaviate handles a lot of the “glue” that would normally be required with something like Chroma. With Chroma, you’d need to take the search query, vectorize it yourself, then do the search, collating, and so on before passing the results to the LLM. Weaviate makes this easier: you send the document and its metadata, and it handles the vectorization on its own. Searching works similarly: you send the search query and get back what you want. For my uses, it came down to Weaviate being easy and fast.
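To show the “glue” that Weaviate takes off your hands, here is a library-free Python sketch of the manual steps: vectorize documents at insert time, vectorize the query, score and rank, then collate the hits and their metadata into a prompt. This is not Chroma's API. The bag-of-words `embed()` is a toy stand-in for a real embedding model so the example runs offline, and the sample documents are made up from this post.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": a bag-of-words count vector, standing in for a real model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, docs: list[dict], k: int = 2) -> list[dict]:
    # Step 1: vectorize the query yourself.
    q = embed(query)
    # Step 2: score every stored document (a real store would use an ANN index).
    return sorted(docs, key=lambda d: cosine(q, d["vector"]), reverse=True)[:k]


def build_prompt(query: str, hits: list[dict]) -> str:
    # Step 3: collate the hits and their metadata into context for the LLM.
    context = "\n".join(f"- {h['text']} (source: {h['source']})" for h in hits)
    return f"Use this context:\n{context}\n\nQuestion: {query}"


docs = [
    {"text": "Faster Whisper transcribes an hour of audio in under a minute.", "source": "stt.md"},
    {"text": "Weaviate vectorizes documents on insert.", "source": "vector.md"},
    {"text": "nginx terminates SSL for the internal subdomain.", "source": "arch.md"},
]
for d in docs:
    d["vector"] = embed(d["text"])  # vectorizing at insert time is also on you
```

With Weaviate, the `embed()` calls and the ranking move server-side, which is the convenience described above.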

Weaviate can handle most operations on its own, including vectorization, which can be done in multiple ways. You can use a provider such as OpenAI, or host the embedding models locally [11]. It’s also easy to extend their local options if you prefer another embedding model. For my purposes, I use the LocalAI embeddings.
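One way to point Weaviate at LocalAI is the `text2vec-openai` module with a custom base URL. The sketch below builds the REST requests for a class definition, an insert, and a `nearText` query with the standard library. It assumes the module is enabled on the Weaviate container (for example via `ENABLE_MODULES=text2vec-openai`), that your Weaviate version supports the `baseURL` module setting, and that `Document`, `text`, and `source` are hypothetical names. It does not select an embedding model, so Weaviate will request its default OpenAI model name; either make LocalAI answer to that name or configure the model per the Weaviate docs for your version. The official v4 Python client also talks gRPC (port 50051 by default) alongside REST; this sketch sticks to REST to stay dependency-free.

```python
import json
import urllib.request

# Hypothetical internal addresses: Weaviate's REST port, and the LocalAI endpoint
# that Weaviate's text2vec-openai module will call for embeddings.
WEAVIATE_URL = "http://weaviate:8080"
LOCALAI_BASE = "http://localai:8080"

DOCUMENT_CLASS = {
    "class": "Document",
    "vectorizer": "text2vec-openai",
    "moduleConfig": {"text2vec-openai": {"baseURL": LOCALAI_BASE}},
    "properties": [
        {"name": "text", "dataType": ["text"]},
        {"name": "source", "dataType": ["text"]},
    ],
}


def post(path: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST against Weaviate's REST API."""
    return urllib.request.Request(
        f"{WEAVIATE_URL}{path}",
        data=json.dumps(payload).encode("utf-8"),
        # Weaviate forwards this key to the OpenAI-compatible endpoint; LocalAI may ignore it.
        headers={"Content-Type": "application/json", "X-OpenAI-Api-Key": "not-needed"},
        method="POST",
    )


def create_class_request() -> urllib.request.Request:
    return post("/v1/schema", DOCUMENT_CLASS)


def insert_request(text: str, source: str) -> urllib.request.Request:
    # No vector in the payload: Weaviate calls the vectorizer itself.
    return post("/v1/objects", {"class": "Document",
                                "properties": {"text": text, "source": source}})


def near_text_request(query: str, limit: int = 3) -> urllib.request.Request:
    # The query is vectorized server-side too; json.dumps quotes and escapes the string.
    gql = ("{ Get { Document(nearText: {concepts: [%s]}, limit: %d) { text source } } }"
           % (json.dumps(query), limit))
    return post("/v1/graphql", {"query": gql})
```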

In my stack, it’s the one service that doesn’t go through nginx, as shown in the architectural diagram above. This is because of the gRPC layer it also uses.

This is also, by far, one of the best documented projects I’ve come across.

Development Layer

The AI machine is also used for remote development, but it’s worth clarifying what I mean by “development” here. I write a lot of tools that interact with LLMs (and all the components mentioned above) using LangChain and the base OpenAI Python library. These tools are version controlled and run from the command line or as Streamlit applications. I do this type of development on my laptop, because it doesn’t require transformers or anything like that; the AI machine does the heavy lifting of running whatever the tool sends it.

The development that is actually done on the AI machine really revolves around a few categories:

- Fine-tuning models: I do a fair amount of fine-tuning, mostly around LLMs. I’ve used the unsloth [12] project for many of these. I’ve also fine-tuned my own voice models, vision-based models, etc.

- Bulk-imports/long-operations: Anything that is going to take many hours to complete, I offload here.

These are run either in a tmux session or through PyCharm remote development, depending on what I’m doing. Output from all of this tends to go to a shared TensorBoard area so I can view all my runs.
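One way to keep a shared TensorBoard area tidy is to give every run a sortable directory under a common root and point the trainer's logging there. The sketch below assumes hypothetical `/srv/tensorboard` and `/srv/checkpoints` roots and produces keyword arguments for Hugging Face `transformers.TrainingArguments`, which unsloth's example notebooks pass to trl's trainers. `logging_dir` and `report_to` are existing `TrainingArguments` options, but check that `logging_dir` is still honored in your transformers version.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional

# Hypothetical shared roots: TensorBoard watches TB_ROOT, checkpoints go to CKPT_ROOT.
TB_ROOT = Path("/srv/tensorboard")
CKPT_ROOT = Path("/srv/checkpoints")


def run_name(tag: str, when: Optional[datetime] = None) -> str:
    """Sortable run name such as 20240501-120000-lora-r16."""
    when = when or datetime.now()
    return f"{when:%Y%m%d-%H%M%S}-{tag}"


def training_kwargs(project: str, tag: str, when: Optional[datetime] = None) -> dict:
    """Keyword arguments for transformers.TrainingArguments that log into the shared area."""
    name = run_name(tag, when)
    return {
        "output_dir": str(CKPT_ROOT / project / name),  # checkpoints stay out of the log tree
        "logging_dir": str(TB_ROOT / project / name),   # TensorBoard event files land here
        "report_to": "tensorboard",
        "logging_steps": 10,
    }
```

Then `TrainingArguments(**training_kwargs("llm-ft", "lora-r16"))` wires a run into the shared area, and `tensorboard --logdir /srv/tensorboard --bind_all` serves every project's runs side by side.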

Conclusion

I hope this is useful for anyone who wants to emulate or try anything listed here. I’ll give a small (but tentative) mention to a project that lists some tools, “Awesome AI Tools” [13]. It may be worth perusing at least its pull requests and some of what they mention. I’m not a huge fan of this repository because it mentions a lot of non-open-source tools, pull requests go unapproved, and there are paid sponsorships among the recommended tools. That said, it can be useful to some. Another way to find useful tools is to get involved in various projects. I wouldn’t have become aware of tools like Open WebUI if I weren’t part of some of the HuggingFace model groups.

References

- [1] LocalAI - Homepage

- [2] Hugging Face - MTEB Leaderboard

- [3] GitHub - faster-whisper

- [4] GitHub - faster-whisper-server

- [5] GitHub - OpenedAI Speech

- [6] GitHub - SillyTavern

- [7] Open WebUI - Documentation

- [8] Open WebUI - Homepage

- [9] GitHub - Open WebUI Pipelines

- [10] Weaviate - Homepage

- [11] Weaviate Documentation - Locally Hosted Transformers Text Embeddings + Weaviate

- [12] GitHub - unsloth

- [13] GitHub - Awesome AI Tools